Artificial intelligence is potentially a game changer for science and industry, promising efficiencies that can accelerate research, improve productivity, and enable major advances. It is also an energy hog. According to the July 2024 Department of Energy (DOE) report Recommendations on Powering Artificial Intelligence and Data Center Infrastructure, “Data center power demands are growing rapidly…A significant factor today and in the medium-term (2030+) is expanding power demand of AI applications. Advancements in both hardware and software have enabled development of large language models (LLMs) that now approach human capabilities on a wide range of valuable tasks. As these models have grown larger, so have concerns about sizeable future increases in the energy to deploy LLMs as AI tools become more deeply woven into society.”

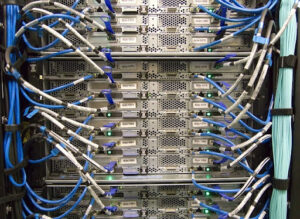

Arman Shehabi, who heads the data center analysis team in the Energy Technologies Area (ETA), estimates, “A typical server rack with a bunch of conventional servers in them used about three to five kilowatts of power. And now, with the new AI specialized servers, those racks are using as much as a hundred kilowatts.”

It’s a problem that Berkeley Lab has been working on while also looking to take advantage of the potential of AI. “There are great opportunities for data center energy efficiency improvements in a world that’s being strained by the needs of AI, because it’s uncharted territory,” said Arman. “And it’s a topic on which Berkeley Lab can make a difference. Our areas of expertise in materials sciences, computing sciences, energy analysis, building energy efficiency, and facilities such as NERSC are just some of the capabilities that we can bring to bear on the challenge,” he continued.

Arman notes that Berkeley Lab has a long history of working on data center energy efficiency. In the early 2000s, following the dot-com boom, he and his team authored one of the first reports on the growth in data center energy use, at the request of the Environmental Protection Agency. Another Lab study followed in 2016, exploring the impact of data center energy efficiency measures such as better thermal management and reducing the number of idle servers. Arman and Sarah Smith, another researcher in ETA’s Sustainable Energy Systems group, are now working on a report to Congress on the implications of AI growth for data center energy usage, due at the end of this year.

On the technical assistance side, the Lab has also evaluated federal and other data centers and provides training to help data center operators run their facilities more efficiently.

DOE Zeroes In On Data Center Energy Efficiency

Following the July report, the DOE looked at R&D opportunities to address the issue.

Load flexibility, meaning ways that data centers could scale back their electricity demand from the grid during peak hours, was one of the first areas that the DOE wanted to explore. The DOE’s Office of Energy Efficiency and Renewable Energy (EERE) asked Berkeley Lab to host a workshop on load flexibility for data centers. On Nov. 1, about 50 participants gathered for a hybrid half-day workshop, including representatives from major data center companies such as Amazon, Google, Meta, and Microsoft, electric utilities and regulators, the DOE, other key industry stakeholders, and national lab researchers. Dominion Energy, an electricity provider on the East Coast, talked about the challenges of meeting the power demand from a growing number of data centers.

“The goal of the workshop was to bring together all of the key stakeholders to discuss the needs and opportunities for flexible energy demand in data centers, and how the DOE and the national labs can help,” said Tom Kirchstetter, director of ETA’s Energy Analysis & Environmental Impacts Division.

How to Get Involved

In addition to the external workshop, the ETA team has also been convening monthly internal meetings for researchers across Berkeley Lab who are doing work relevant to improving the energy efficiency of data centers. The goal of these internal meetings is to better understand capabilities across the Lab and to develop ideas for multi-disciplinary research to address this challenge.

A kickoff meeting in August, attended by researchers and staff from ETA, CSA, and ESA, featured internal and external presenters on the impact of AI scaling on electricity demand and energy efficiency. At the September meeting, Arman shared his team’s methodology for estimating data center energy use with the rise of AI. The November meeting featured an analysis of the power use of NERSC’s Perlmutter system.

“Through these meetings, we hope to better understand and articulate our capabilities,” said Tom. “The DOE has expressed an interest in solutions to meet the growing power demand for AI in data centers. With our expertise in data center energy use, we are in a good position to provide leadership on this topic.”

“It’s fantastic to see the breadth of research and interest in this topic at the Lab. It’s an area in which we can very honestly say we work on an issue from end to end, from discovery science to applied science to electricity markets research,” he added.

To participate in the internal monthly meetings on data center energy use, contact Tom Kirchstetter at TWKirchstetter@lbl.gov. The next meeting is scheduled for December 17.

For more information:

Center of Expertise for Energy Efficiency in Data Centers

United States Data Center Energy Usage Report, published 2016